Default Title

Default excerpt

Posted on 30-Jun-2025, 1 min read

Default content

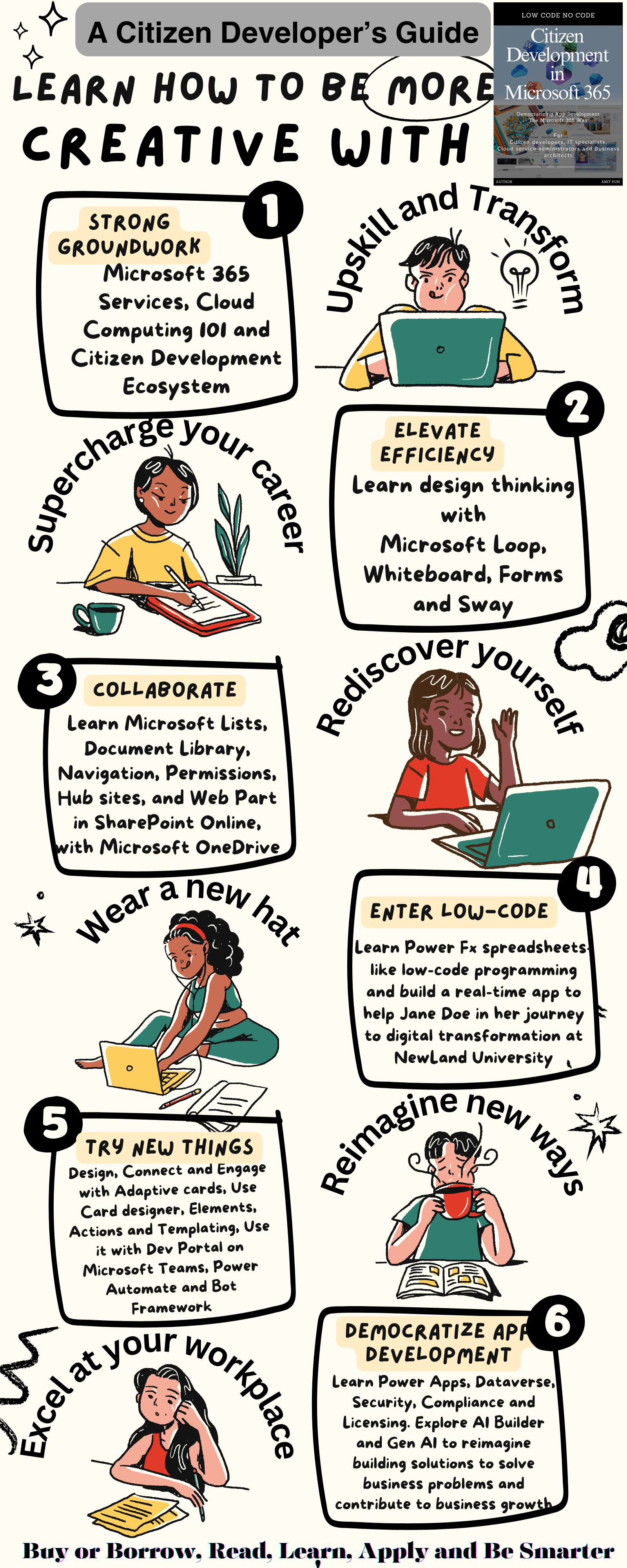

© Dr. Amit Puri - Developed with Transforming Tomorrow, One Algorithm at a Time: The AI Revolution